There’s a quote from Jeff Bezos that resonates a lot with me and that I’ve been using frequently, that says (paraphrasing from memory):

Whenever the data and the anecdote disagree, typically the anecdote is right.

I like that thinking because it speaks to the probabilistic nature of what we do in knowledge work, and it grounds us in a couple of fundamental realities:

Any model (like when we try to capture some measurements, or want to use data to make decisions) is flawed (or at least subject to flaws), although some might be useful (as another quote, attributed to George Box, would say: “All models are wrong, but some are useful”).

Only first-hand experience can fully make sense of what is going on… So any measurement done from a distance, so to speak, is only capturing a frame, or is providing a kind of snapshot.

So, whenever I use some data point to trigger a discussion, typically in some delivery execution context, I will often preface it with a reference to Bezos’s short maxim, essentially to open the conversation up by saying I am there to hear the anecdotes. In other words, to hear what might be the disagreeing perspective of the people actually doing the work.

What I am trying to pick up on is also about separating the noise from the signal. What most likely is noise tends to come across as “hoping for the best”. Whereas an actual signal will provide useful context that enriches the conversation, such as:

“We did uncover quite a bit more scope than originally anticipated, but now we are much more confident that we know pretty much everything that needs to be done.”

“It has been a couple of challenging weeks because we were short on capacity and we received more unplanned work than usual; it was kind of a perfect storm.”

“We were kind of stuck for a while due to some dependencies, but from now on most of the work is in our own hands to get done.”

Now, you may be asking yourself: this is all well and good, but aren’t we then essentially suggesting that we are better off just letting the teams figure out how confident they are in delivering the remaining work?!

Well, in a way I am. But at the same time I’m alluding to how to deal with yet another fundamental reality:

The fact that we all tend to be optimistic about our own chances!

And that’s precisely why and where metrics, or any kind of objective observation, have their place and utility… To provide a feedback mechanism that hopefully will trigger action when it is due. Or, at a bare minimum, to provide a frame in which a meaningful conversation can happen in a way that challenges that premise of human nature (of tending to be optimistic about what a person or a team can accomplish).

To put it in other words, the metric can serve a purpose and be a trigger to move to deeper thinking. Then it’s up to you (or to the team) to figure out to what extent the (cold) number is capturing a signal, or whether it might rather be noise (for now). That’s when the anecdote takes precedence.

A practical example on delivery confidence

Chances are that, at least for some, what I just described may sound rather abstract… I figure a practical example can come in handy. This is one that I’ve been using quite extensively these days. In fact, I have been sharing bits and pieces of it across different posts, which is actually a nice way to give some background to begin with:

I’ve talked about reframing planning as an investment, and grounding what you “invest” against as concrete items that flow through a team’s development process. I showed how I use probabilistic forecasting with Monte Carlo simulations to make a more (data-)informed choice on which risks to take.

Once you accept that probabilistic nature, and that we are ultimately better placed to manage value-related issues by thinking of them as risk, we can also adapt the way we position commitments to reflect it, for example with a “now”, “next”, “later” pattern within the period for which we have to give that perspective (and somehow communicate our commitments).

And finally, I introduced a simple metaphor that helps to create a language that starts giving context to talk about risks as we are going about both making commitments (as a static snapshot) and delivering (as a dynamic process) against those commitments.

In particular, the example I want to use this time around speaks about a way to deal with that ongoing question while delivering:

How confident are we that we will manage to deliver all that has been committed?

Obviously, that can only be meaningfully forecasted with a few overarching assumptions in place:

Context of the team has been fairly stable, so we can use historical data to inform and project on work not yet finished.

Pieces of work to be used for forecasting purposes are visible and managed actively in some kind of system of work (e.g., Jira).

We can create a snapshot of the current understanding of the remaining work, which is linked to overarching commitments (or goals) and is measurable in the same way historical data can be sampled (e.g., number of expected work items / stories).

The bottom line of the approach I currently use is that there are two levels of work that matter to the teams:

A “team portfolio” level (think of it as essentially the different tracks of work; maybe you know them by names like Epics, Features or even Projects in your context), which describes what has been committed (even if only directionally, without a fixed scope upfront).

That “team portfolio” level is broken down (and it’s fine if that’s an ongoing, emerging process) into a tactical level of work that actually flows through the team’s development process (work items like stories or tasks, or whatever you may call them in your context).

I then use Monte Carlo simulations to model the throughput forecast distribution for the given team based on a historical data sample (e.g., of 60 days). I keep the data at the most granular level (i.e., daily) because that avoids adding unnecessary complexity to the modeling. But then I roll up the result of each simulation (done 1000 times) to a weekly throughput, because that is more meaningful for generating insights.
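As a rough illustration of that step, here is a minimal sketch in Python. It is not the exact model I run: the function name `simulate_weekly_throughput`, the example history, and the choice to draw 7 days with replacement per simulated week (assuming the history is in calendar days, weekends included) are all assumptions made for the example.

```python
import random
import statistics


def simulate_weekly_throughput(daily_history, n_simulations=1000,
                               days_per_week=7, seed=None):
    """Resample daily throughput with replacement and roll up to weekly totals.

    daily_history: items finished per day (hypothetical data, e.g. 60 days).
    days_per_week: assumes calendar days; use 5 if the history has workdays only.
    """
    if not daily_history:
        raise ValueError("daily_history must not be empty")
    rng = random.Random(seed)
    # Each simulation draws one week's worth of days and sums them up.
    return [
        sum(rng.choices(daily_history, k=days_per_week))
        for _ in range(n_simulations)
    ]


# Hypothetical history: a two-week pattern repeated to cover roughly 60 days.
history = [0, 2, 1, 0, 3, 0, 0, 1, 1, 2, 0, 1, 0, 0] * 4
weekly = simulate_weekly_throughput(history, n_simulations=1000, seed=7)
print(min(weekly), statistics.median(weekly), max(weekly))
```

The list of simulated weekly totals is the distribution from which percentiles can then be read.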

But how do we get to estimate a confidence level of being able to deliver remaining committed work?

Well, the logic is based on finding the equilibrium between two numbers: (a) the amount of work yet to be accomplished (essentially the sum of all unfinished tactical work linked to the “team portfolio” level that has been committed or targeted as goals); and (b) the percentile of weekly throughput that, multiplied by the remaining weeks in which the work is expected to be finalized, matches that amount of remaining work.

That is to say – we answer the underlying fundamental question on delivery confidence by answering a slightly simpler one: how much throughput a team would have to consistently get done across the remaining period and how often, given the distribution modelled with the Monte Carlo simulations, that is forecasted to happen?

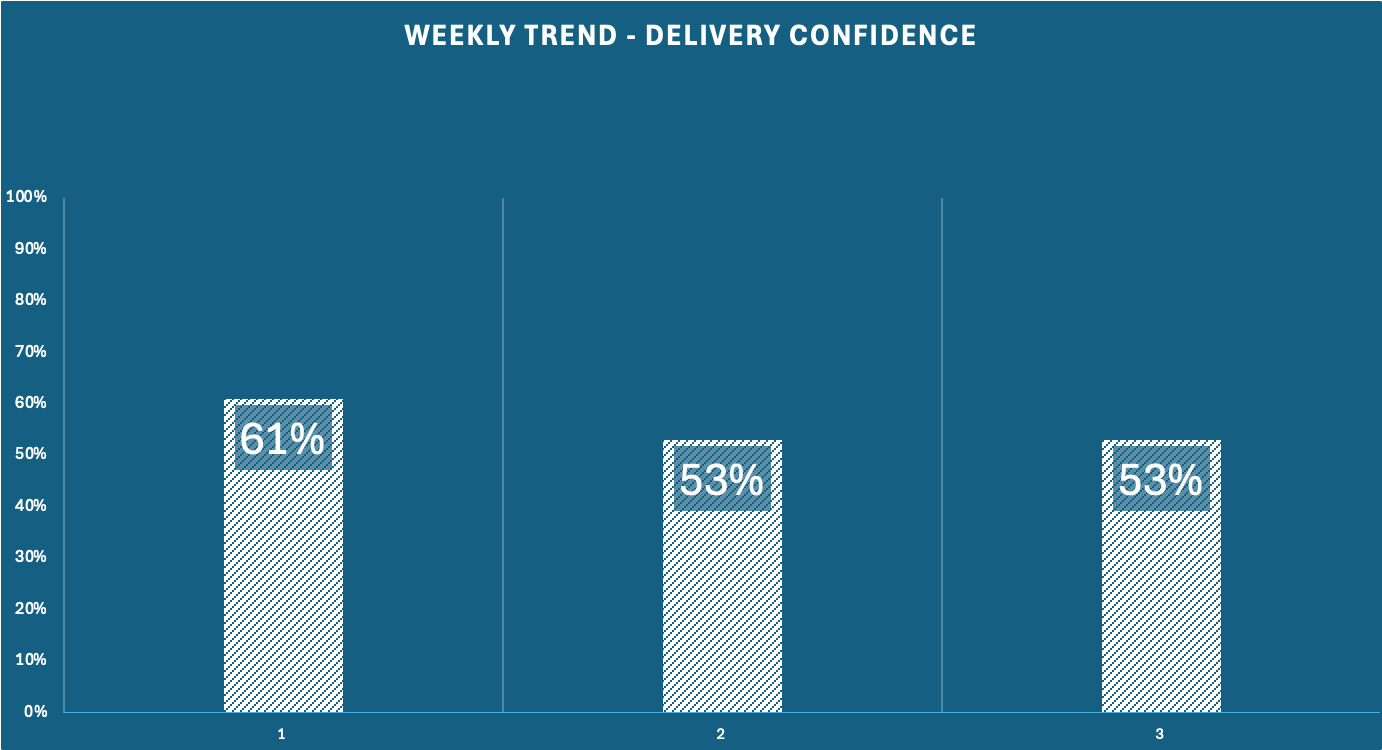
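The logic above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not a definitive implementation: `delivery_confidence` is a hypothetical name, the weekly samples are made-up numbers standing in for the simulated distribution, and confidence is read as the share of simulated weeks that reach the required weekly throughput.

```python
def delivery_confidence(weekly_samples, remaining_items, remaining_weeks):
    """Share of simulated weeks whose throughput meets the required pace.

    weekly_samples: simulated weekly throughput values (assumed given).
    remaining_items: unfinished work items linked to the commitments.
    remaining_weeks: weeks left in the period (must be positive).
    """
    if remaining_weeks <= 0:
        raise ValueError("remaining_weeks must be positive")
    if not weekly_samples:
        raise ValueError("weekly_samples must not be empty")
    # Pace the team would have to sustain every week until the end.
    required_per_week = remaining_items / remaining_weeks
    hits = sum(1 for w in weekly_samples if w >= required_per_week)
    return hits / len(weekly_samples)


# Made-up distribution of 10 simulated weeks, 24 items left, 4 weeks to go.
samples = [3, 4, 5, 5, 6, 7, 8, 8, 9, 10]
confidence = delivery_confidence(samples, remaining_items=24, remaining_weeks=4)
print(f"{confidence:.0%}")  # required pace is 6 per week; 6 of 10 weeks reach it -> 60%
```

Note the simplification: this looks at one weekly percentile held constant over the remaining period, rather than simulating the total over all remaining weeks, which would usually give a different (often less conservative) number.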

By regularly monitoring the trend, for instance on a weekly basis, we can gain further insight into how things are evolving, and whether there is a potential need to do something about the forecasted delivery confidence. Always keep in mind the importance of not taking the (cold) number for granted, but rather treating it as a feedback mechanism that can (hopefully) ‘nudge’ meaningful conversations that are grounded in and informed by data. And remember that the data is only as good as its agreement with the anecdote, which only the team can add and make sense of through their first-hand experience.

In other words, the team provides the context to assess to what extent the number is noise as opposed to a signal (to be acted upon). What follows can vary widely depending on many factors, not all of which are under a team’s control. For the most part, though, should actions really be needed, they will tend to involve some continuous planning practices, focusing on what the team has autonomy over and by how much (even though it may require some level of stakeholder management), e.g.:

More margin for adapting goals/commitments: some replanning of the number of commitments or goals that are still realistic to pursue, either totally descoping some work or partially descoping it (down to what is really needed).

Less margin for adapting goals/commitments: decide to focus, so that the team prioritizes finishing work (“better to kick ass and hit a few than to do a half-ass job on all”).

I hope this practical example gives some further insight, so that we don’t have to rely only on “hoping for the best” when it comes to delivering with confidence. However experienced and discerning a team may be, we are always somewhat subject to human nature and its related tendencies. We can do better than that (“hope for the best”) by finding the right balance between using data and metrics, as a tool and a feedback mechanism, while also being mindful that it’s human ingenuity that will solve problems in the end, should actions be needed, after filtering the noise from the (potential) signals.

By Rodrigo Sperb, feel free to connect, I'm happy to engage and interact. I’m passionate about leading to achieve better outcomes with better ways of working. How can I help you?

I usually find myself pessimistic and not optimistic.

I liked the quote you used though from Bezos. I think it highlights a lot of the issues. The data says this but the customer is saying that. Sometimes folks only want to use the data and ignore the anecdotes, which I get. Anecdotes can be more subjective. As someone who was on calls on a weekly basis and scoring how operations felt with the new tools and processes being delivered, a lot of the operations feedback was subjective. The data says that everything is awesome but meanwhile we are screaming things are not okay. I've also done the opposite myself too. In other cases I've used the data to say your anecdote is wrong. It's a fine balance and line to walk.