Here’s something that has been one of my core beliefs for a while: the obvious is worth repeating. Some time back I came across another insight that further corroborates that idea:

Every day there are new people joining the market. And what may be obvious for you, or us, might be something they’re hearing for the first time.

So today is one of those days when I make an effort to provide a consolidated and reasonably concise version of a few things I have written about in more detail before, which may be obvious to some. Think of it as a kind of “pill” that is easier to swallow and hopefully useful, either as a reminder or as a truly new insight.

First things first, a few definitions. When I speak about flow, I mean it in the strict technical sense of “the action or fact of moving along in a steady, continuous stream”, which in this context refers mostly to work items in knowledge work (e.g., software development). But I also mean it in the broader sense of its likely implication: a means to learn faster (whether we are wrong).

The “whether we are wrong” part, while not crucial to the definition, is important to spell out. I take a negative framing because that’s consistent with empiricism and the scientific method: we aim at disproving our hypotheses, not proving them (the latter is more easily manipulated).

And focus is an enabler of flow, in the sense that as long as there are more competing priorities (i.e., less focus) than a system has the capacity to deliver, things will tend to flow more slowly. While flowing faster is not a target in and of itself, it helps to achieve better outcomes, in line with what we are after, i.e., optimizing for value.

That nicely sets the stage for our 3 key reasons “du jour”...

Without focus, a set priority may not mean much…

I like to talk about this as something that should make product managers (or anyone in a role responsible for setting priorities with the aim of optimizing for value) absolutely mad!

The more things you have going on in parallel, the higher the chance that what comes out first won’t be determined by any priority set upfront.

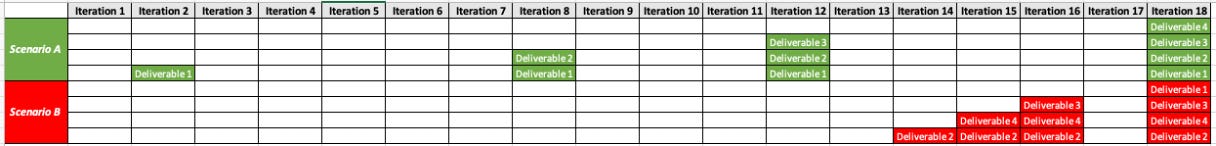

This is somewhat intuitive (or so I hope)... But in case you can use some additional insight, some time back I put together a simple simulation game (explained in this post). To give credit where it is due, it is largely inspired by the Kanban / flow games out there, typically used in related trainings. In a following post, I showed an example of playing the game, and I promise the remarkable result wasn’t forced: the deliverable that was given the highest priority upfront actually came out last in the scenario of working in parallel across the 4 prioritized deliverables.

It’s also worth talking about the “batch-like” behavior of working in parallel (Scenario B), as opposed to the smoother flow of working in sequence (Scenario A of the simulation). That batching essentially delays learning (whether we are wrong) and thus increases risk, so there’s an element of risk management as well.

In other words, it’s kind of the worst of both worlds: the priority set upfront tends not to mean much, and we are exposed to higher risk. Again, if that doesn’t get product managers (or similar roles) mad, I’m not quite sure what would…
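For those who prefer code to dice, here is a minimal Python sketch of the same idea. To be clear, this is not the simulation game from the posts mentioned above: the deliverable names, the effort sizes and the capacity of one unit of work per day are all assumptions made up for illustration. Scenario A works through the deliverables strictly in priority order, while Scenario B spreads the same capacity across all of them, round-robin style.

```python
# Hypothetical deliverables, listed in priority order (P1 = highest priority).
# Sizes are made-up units of effort; the team completes one unit per day.
SIZES = {"P1": 6, "P2": 4, "P3": 5, "P4": 3}


def run_sequential(sizes):
    """Scenario A: finish each deliverable before starting the next one."""
    finished = {}
    day = 0
    for name, size in sizes.items():
        day += size
        finished[name] = day
    return finished


def run_parallel(sizes):
    """Scenario B: rotate across all unfinished deliverables, one unit per day."""
    remaining = dict(sizes)
    finished = {}
    day = 0
    while remaining:
        # Take a snapshot so finished items can be removed while looping.
        for name in list(remaining):
            day += 1
            remaining[name] -= 1
            if remaining[name] == 0:
                finished[name] = day
                del remaining[name]
    return finished


if __name__ == "__main__":
    for label, result in (("A (sequence)", run_sequential(SIZES)),
                          ("B (parallel)", run_parallel(SIZES))):
        order = sorted(result, key=result.get)
        print(label, "finish days:", result, "delivery order:", order)
```

With these made-up sizes, Scenario A delivers the top priority first, on day 6, whereas in Scenario B nothing comes out before day 12 and the top priority (which happens to be the largest) comes out last, on day 18. Different sizes give different orders, which is rather the point: in parallel, size decides what finishes first, not the priority that was set.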

With too much going on in parallel, it’s more difficult to make trade-offs

Having to switch gears every now and then is just a reality. Perhaps more importantly, it can be a force for good, if through it we learn how to better adapt to evolving circumstances. In other words, it’s not only a matter of accepting and confronting reality, it can actually be a good thing!

The “remedy” to apply is to have the ability to make clear trade-offs…

If we are to accept this new priority because of evolving circumstances, what else can we stop working on?!

It turns out that this conversation is not the same regardless of how many things are going on: the more there are, the harder it gets…

The reason is the higher likelihood of conflict when re-prioritizing: why should “this” be stopped and not “that”!? That sort of thing.

Put another way, right-sizing a team’s batch of ongoing work tracks, and the cumulative size of their respective backlogs, helps strike a good balance between: (a) giving teams options on what to work on in the near future (e.g., in case of an unexpected blockage); and (b) leaving enough room to make adjustments less of a burden when re-prioritizing and shuffling things around.

And then there is this: what obsessing over value really means

Not that long ago, I wrote a two-part series on “what the heck is value”. In the first part, I explored the discipline of trying to put a finger on it, as a healthy way to get clarity on whether something is potentially valuable enough to consider going after. But then, as a kind of plot twist, in part two I made the case that once we have done that, we should forget about it, at least when it comes to prioritizing the work at the execution level.

That’s because once we accept that both value and duration (how much time we believe it will take us to deliver) are probabilistic, and that we can’t accurately determine them upfront, it doesn’t take much to demonstrate through simulation that the best strategy for optimizing value is to focus on finishing the “shortest job first”.

In simpler words, by making sure things are small enough (while still being considered potentially valuable) to flow smoothly and quickly through our delivery system, we increase our chances of realizing more value.
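The simulation behind that claim is not reproduced here, but a simplified, deterministic version of the argument fits in a few lines of Python. The assumption (mine, for this sketch) is that value is too uncertain to rank upfront, so every item gets the same expected value per day once delivered. The item names, durations and the 30-day horizon are made up. The code tries every possible order of finishing the items one at a time and checks which one accumulates the most expected value.

```python
from itertools import permutations

# Hypothetical work items and their durations in days (illustrative numbers).
DURATIONS = {"A": 5, "B": 2, "C": 8, "D": 3}

# Assumption: value cannot be ranked reliably upfront, so each delivered item
# is expected to generate the same value per day until the horizon.
EXPECTED_VALUE_PER_DAY = 1.0
HORIZON = 30  # days


def value_realized(order):
    """Expected value accumulated by the horizon for a given finishing order."""
    clock = 0
    total = 0.0
    for name in order:
        clock += DURATIONS[name]  # items are finished one at a time
        total += EXPECTED_VALUE_PER_DAY * max(HORIZON - clock, 0)
    return total


# Brute force over all 24 possible orders of the four items.
best = max(permutations(DURATIONS), key=value_realized)
worst = min(permutations(DURATIONS), key=value_realized)

if __name__ == "__main__":
    print("best order: ", best, value_realized(best))
    print("worst order:", worst, value_realized(worst))
```

With these numbers, the best order is simply shortest first (B, D, A, C) with 85 value-days, against 65 for longest first. It is a sketch of the intuition under one simplifying assumption, not a proof for every situation.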

Do notice how all of that nicely circles back to our very definition of why flow matters… It’s all about that focus on learning faster (whether we are wrong)!

So, what now!?

Here’s something I still hear every now and then: “Rodrigo, ok, that makes sense… but we can’t be orienting our work towards achieving a certain baseline of what (faster) flow might mean over here!? There’s a risk that it becomes a target and thus loses its ability to be helpful…”

And I get that to some extent, and I definitely acknowledge the risk of turning a measurement into a target. But quite frankly that’s a bit of a “strawman” representation of the perspective I bring to the table…

In the end, having a baseline reference in mind is always helpful as a kind of mirror: a feedback mechanism to reflect on a system’s ability to deliver against what “good enough” may look like (in the specific context). It should give perspective and the means to practically do something about improving things.

It does take a bit of a leap of faith, and really not a big one… Which is to accept that change often comes not by direct but rather by indirect (or, more technically, oblique) action. The point is not to micromanage each and every item being worked on so that it never ages beyond some baseline reference, but rather to figure out which levers are more directly in our control and will influence things towards the outcome we want.

I’m quite convinced that there are two pragmatic levers that will often be helpful to achieve what we are after (optimize for value), through the mechanism we can influence (faster flow that leads to faster learning): (a) keeping a lower number of items being worked on in parallel; and (b) making sure the items are sufficiently right-sized.
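One way to see why lever (a) tends to work is Little’s Law, a well-known result from queueing theory that relates average cycle time, average work in progress and average throughput for a reasonably stable system. The sketch below assumes a hypothetical team finishing 2 items per week. Importantly, it also assumes throughput stays the same as work in progress goes down, which is something to verify in practice rather than take for granted.

```python
def average_cycle_time(avg_wip: float, avg_throughput: float) -> float:
    """Little's Law: average cycle time = average WIP / average throughput."""
    if avg_throughput <= 0:
        raise ValueError("throughput must be positive")
    return avg_wip / avg_throughput


if __name__ == "__main__":
    throughput_per_week = 2.0  # hypothetical: items finished per week
    for wip in (12, 6, 3):     # hypothetical numbers of items in progress
        weeks = average_cycle_time(wip, throughput_per_week)
        print(f"WIP {wip:>2} -> average cycle time {weeks} weeks")
```

Under those assumptions, going from 12 to 3 items in progress brings the average cycle time from 6 weeks down to 1.5 weeks.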

In the best spirit of the scientific method, I’m obviously open to learning that I may be wrong about this. I need to hear the counter-argument though, and not one that distracts from, and risks misrepresenting, the depth of the implications of what I advocate for. I keep both my ears and eyes open…

By Rodrigo Sperb, feel free to connect, I'm happy to engage and interact. If I can be of further utility to you or your organization in getting better at working with product development, I am available for part-time advisory, consulting or contract-based engagements.